Ramblings about statistics, AI, and psychology.

-

Predicting EVE Online item sales (BVM Data Science Cup 2017)

This year, the BVM (the German professional association of market and social researchers) hosted its first Data Science Cup. There were four tasks involving the prediction of sales data for the online sci-fi game “EVE Online”. It was my first year working in market research and applying statistics and machine learning algorithms in a real-world context.…

-

Thoughts on the Universality of Psychological Effects

Most published and widely discussed findings from psychological research claim universality in some way. In cognitive psychology especially, the underlying assumption is that all human brains work similarly, and that assumption is not at all unfounded. But findings from other fields of psychology, such as social psychology, also claim generality across time and place. It is…

-

Critiquing Psychiatric Diagnosis

I came across this great post by Vaughan Bell on the Mind Hacks blog about how we talk about psychiatric diseases, how we diagnose them, and how we criticise them. Debating the validity of diagnoses is a good thing. In fact, it’s essential we do it. Lots of DSM diagnoses, as I’ve argued before, poorly predict…

-

Stop the “Flipping”

I came across this interesting article on The Thesis Whisperer blog. It starts from the hypothesis that being an academic is similar to “running a small, not very profitable business”. This is mainly down to two problems: Problem one: There are a lot of opportunities that could turn into nothing, so it’s best to say yes…

-

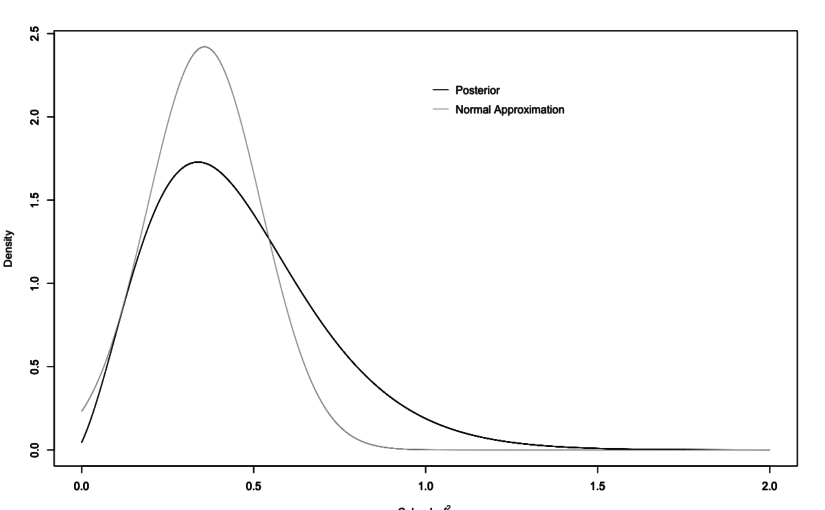

New Preprint: A Bayes Factor for Replications of ANOVA Results

A few weeks ago, I finished up some thoughts on a Replication Bayes factor for ANOVA contexts, which resulted in a manuscript that is available as a preprint on arXiv. The theoretical foundation was laid out by Verhagen & Wagenmakers (2014), and my manuscript mainly extends their approach. We have another paper…
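To make the idea concrete, here is a minimal sketch of a replication Bayes factor in the spirit of Verhagen & Wagenmakers (2014): the original study's posterior for the effect size serves as the prior for the replication, and the replication result is then compared under this "proponent" hypothesis H_r against the null hypothesis H_0. The sketch assumes a simple normal approximation with known standard errors and a flat prior before the original study; it is not their noncentral-t computation, and it does not cover the ANOVA extension in the manuscript. The function names and numbers are hypothetical.

```python
import math


def normal_pdf(x, mean, sd):
    """Density of a normal distribution N(mean, sd^2) evaluated at x."""
    z = (x - mean) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2.0 * math.pi))


def replication_bf_normal(d_orig, se_orig, d_rep, se_rep):
    """Hypothetical helper: replication Bayes factor B_r0 under a normal approximation.

    Assumptions (not from the original post):
    - effect estimates are normally distributed with known standard errors;
    - a flat prior before the original study, so its posterior is N(d_orig, se_orig^2).

    H_r: delta ~ N(d_orig, se_orig^2), hence d_rep ~ N(d_orig, se_orig^2 + se_rep^2).
    H_0: delta = 0, hence d_rep ~ N(0, se_rep^2).
    """
    sd_under_hr = math.sqrt(se_orig ** 2 + se_rep ** 2)
    likelihood_hr = normal_pdf(d_rep, d_orig, sd_under_hr)
    likelihood_h0 = normal_pdf(d_rep, 0.0, se_rep)
    return likelihood_hr / likelihood_h0


if __name__ == "__main__":
    # Hypothetical original study: effect estimate 0.5 with standard error 0.15.
    print(replication_bf_normal(0.5, 0.15, 0.45, 0.12))  # replication finds a similar effect
    print(replication_bf_normal(0.5, 0.15, 0.0, 0.12))   # replication finds no effect
```

A replication that finds an effect close to the original yields B_r0 > 1, while a replication estimate near zero yields B_r0 < 1. The normal approximation is used here only because it gives a closed-form marginal likelihood; with t- or F-statistics, the marginal likelihood under H_r generally has to be computed numerically.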

-

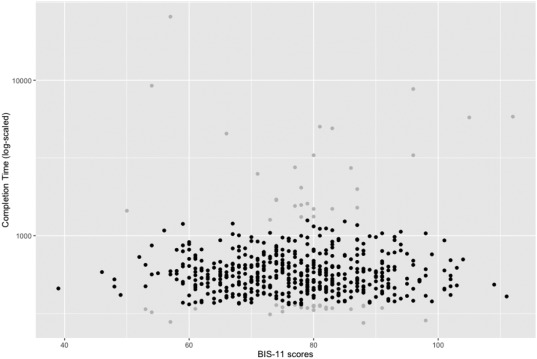

New Paper: Impulsivity and Completion Time in Online Questionnaires

My first first-author paper has been published in Personality and Individual Differences. The paper is titled “Reliability and completion speed in online questionnaires under consideration of personality” (doi:10.1016/j.paid.2017.02.015) and was written together with Lina and Christian.

-

Research is messy: Two cases of pre-registrations

Pre-registrations are becoming increasingly important for studies in psychological research. This is a much-needed change, since part of the “replication crisis” has to do with too much flexibility in data analysis and interpretation (p-hacking, HARKing and the like). Pre-registering a study with its planned sample size and planned analyses allows other researchers to understand what…

-

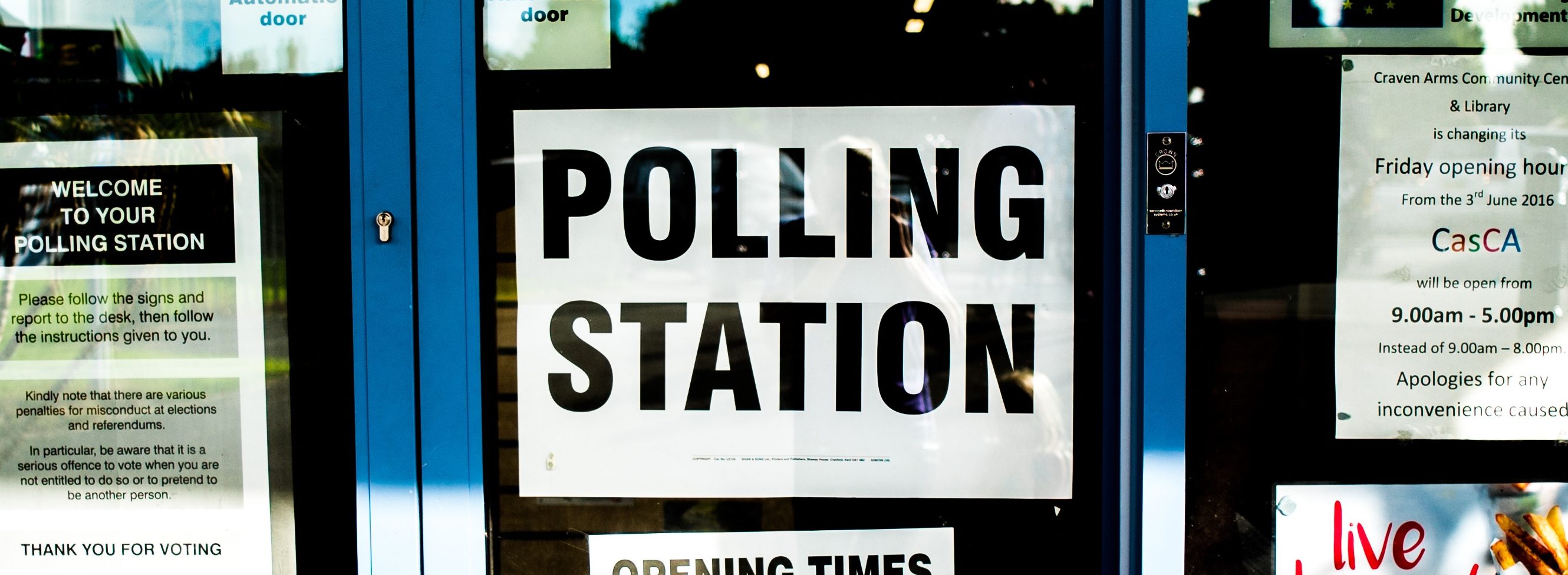

How statistics lost their power – and why we should fear what comes next

This is an interesting article from The Guardian on “post-truth” politics, in which statistics and “experts” are frowned upon by some groups. William Davies shows how the role of statistics in political debate has evolved from the 17th century until today, when statistics are no longer regarded as an objective approach to reality but as an arrogant and…

-

Predictions for Presidential Elections Weren’t That Bad

Nate Silver’s FiveThirtyEight had excellent coverage of the US Presidential Election, with some great analytical pieces and very interesting insights into its models. Almost every poll predicted that Hillary Clinton would win the election, and FiveThirtyEight was no exception. Consequently, there was a lot of discussion about pollsters, their methods and…

-

New Paper: Reliability Estimates for Three Factor Score Estimators

Just a short post on a new paper that is available from our department. If you have computed factor scores after a factor analysis, e.g. using Thurstone’s regression estimator, you might be interested in the reliability of the resulting scores. Our paper explains how to estimate it, compares the reliability of three different factor…
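As a small illustration of the kind of quantity involved, the sketch below computes Thurstone's regression weights w = Σ⁻¹λ for a single-factor model with standardized variables, together with the squared correlation between the factor and its regression score, λ'Σ⁻¹λ (often called the determinacy coefficient). This is not one of the reliability estimators from the paper; it is a related textbook quantity, computed under assumed conditions: one factor with unit variance, known loadings and uniquenesses, and uncorrelated errors. The closed-form value is checked against a simulation. Function names and example loadings are hypothetical.

```python
import math
import random


def _lambda_psi_lambda(loadings, uniquenesses):
    """s = lambda' Psi^{-1} lambda for a diagonal uniqueness matrix Psi."""
    return sum(l * l / p for l, p in zip(loadings, uniquenesses))


def regression_weights(loadings, uniquenesses):
    """Thurstone regression weights w = Sigma^{-1} lambda, assuming Sigma = lambda lambda' + Psi.

    By the Sherman-Morrison identity, Sigma^{-1} lambda = Psi^{-1} lambda / (1 + s).
    """
    s = _lambda_psi_lambda(loadings, uniquenesses)
    return [(l / p) / (1.0 + s) for l, p in zip(loadings, uniquenesses)]


def determinacy(loadings, uniquenesses):
    """Squared correlation between factor and regression score: lambda' Sigma^{-1} lambda = s / (1 + s)."""
    s = _lambda_psi_lambda(loadings, uniquenesses)
    return s / (1.0 + s)


def simulated_r2(loadings, uniquenesses, n=20000, seed=1):
    """Simulate the one-factor model and return the squared sample correlation of factor and score."""
    rng = random.Random(seed)
    w = regression_weights(loadings, uniquenesses)
    factors, scores = [], []
    for _ in range(n):
        f = rng.gauss(0.0, 1.0)  # unit-variance factor
        x = [l * f + rng.gauss(0.0, math.sqrt(p)) for l, p in zip(loadings, uniquenesses)]
        factors.append(f)
        scores.append(sum(wi * xi for wi, xi in zip(w, x)))
    mean_f = sum(factors) / n
    mean_s = sum(scores) / n
    cov = sum((a - mean_f) * (b - mean_s) for a, b in zip(factors, scores))
    var_f = sum((a - mean_f) ** 2 for a in factors)
    var_s = sum((b - mean_s) ** 2 for b in scores)
    return cov * cov / (var_f * var_s)


if __name__ == "__main__":
    loadings = [0.7, 0.6, 0.8, 0.5]               # hypothetical standardized loadings
    uniquenesses = [1 - l * l for l in loadings]  # standardized variables: psi_i = 1 - lambda_i^2
    print(determinacy(loadings, uniquenesses))
    print(simulated_r2(loadings, uniquenesses))
```

The closed form relies on the Sherman-Morrison identity for Σ = λλ' + Ψ, which avoids a general matrix inverse in this one-factor case; with several correlated factors, Σ⁻¹ would have to be computed in full.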
