Ramblings about statistics, AI, and psychology.

-

New Preprint: Making “Null Effects” Informative

In February and March this year, I stayed at the Eindhoven University of Technology in the amazing group of Daniël Lakens, Anne Scheel and Peder Isager, who are actively researching questions of replicability in psychological science. Over the two months I learned a lot, exchanged some great ideas with the three of them – and…

-

Workshop “Einführung in die Datenanalyse mit R” (Post and Slides in German)

Last weekend, I gave a 1.5-day workshop for students at my university on data analysis using R. In this post I briefly share my experience along with the workshop slides and an example project, both of which are in German. If you are looking for an English introduction to R, have a look…

-

Update on the Replication Bayes Factor

In December, I blogged about the ReplicationBF package, which I made available on GitHub. It allows you to calculate Replication Bayes Factors for t- and F-tests. The preprint detailing the formulas for the latter was outdated and the method in the package was not optimal, so I recently updated both.

-

Using Topic Modelling to learn from Open Questions in Surveys

Another presentation I gave at the General Online Research (GOR) conference in March was on our first approach to using topic modelling at SKOPOS: How can we extract valuable information from survey responses to open-ended questions automatically? Unsupervised learning is a very interesting approach to this question, but it is very hard to do right.

-

Replicability in Online Research

At the GOR conference in Cologne two weeks ago, I had the opportunity to give a talk on replicability in Online Research. As a PhD student researching this topic and working as a data scientist in market research, I was very happy to have the opportunity to give my thoughts on how the debate in…

-

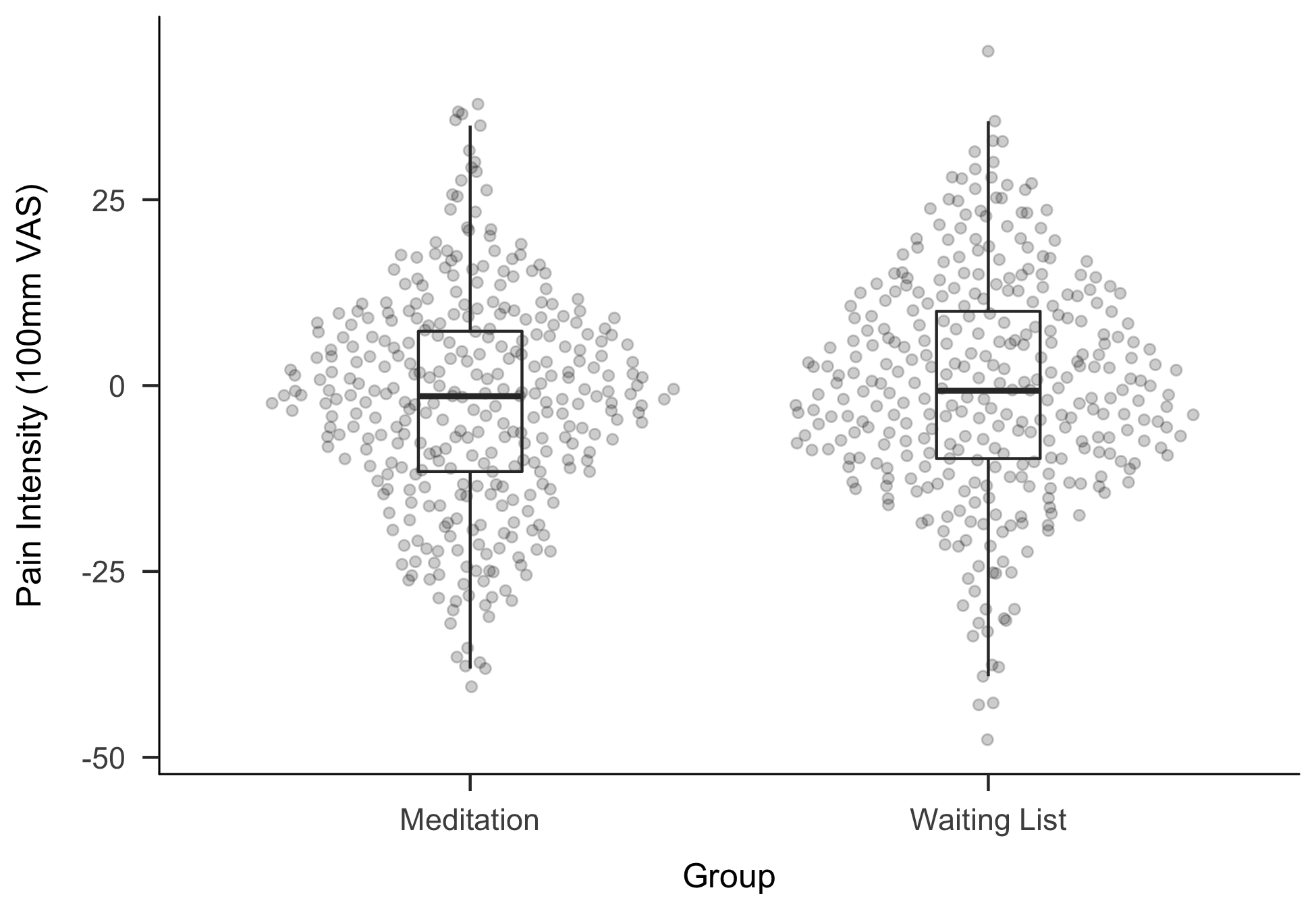

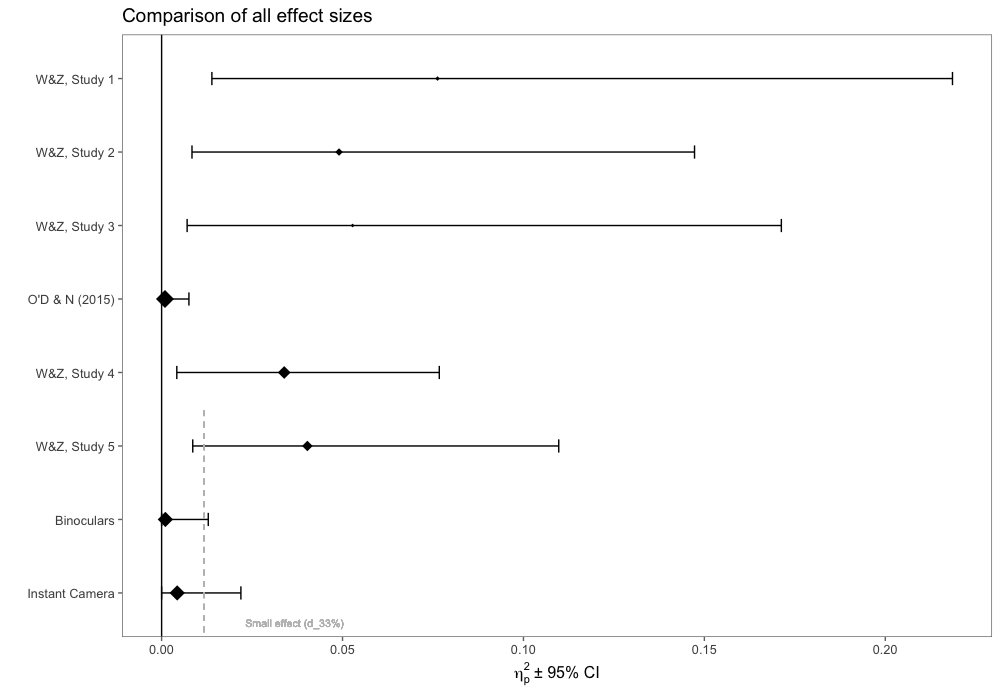

New Preprint: Does it Actually Feel Right?

In a recent post, I mentioned a replication study we performed. We have now finalised the manuscript and uploaded it as a preprint to PsyArXiv. Update (25.04.2018): The paper is now published in Royal Society Open Science and available here.

-

p-hacking destroys everything (not only p-values)

In the context of problems with replicability in psychology and other empirical fields, statistical significance testing and p-values have received a lot of criticism. And without question: much of the criticism has its merits. There certainly are problems with how significance tests are used and p-values are interpreted. However, when we are talking about “p-hacking”,…

-

Introduction to Bayesian Statistics (Slides in German)

Recently, I had the opportunity to give a lecture on Bayesian statistics to a cohort of Master's students in psychology at the University of Bonn. I’d like to share the slides, which are in German, here for interested readers.

-

ReplicationBF: An R-Package to calculate Replication Bayes Factors

Some months ago, I wrote a manuscript on how to calculate Replication Bayes factors for replication studies involving F-tests, as is usually the case for ANOVA-type studies. After a first round of peer review, I have revised the manuscript and updated all the R scripts. I have written a small R package to have all functions…
