Tag: science

-

Leaving Academia: Goodbye, cruel world!

In September, my contract as a research assistant at the University of Bonn ended. I was lucky to have a 50% contract for three years and even luckier that I had the option to extend the contract for another year. Nevertheless, I will leave academia as I’m close to finishing my PhD thesis and…

-

Why “Prestige” is Better Than Your h-Index

Psychological science is one of the fields that is undergoing drastic changes in how we think about research, conduct studies and evaluate previous findings. Most notably, many studies from well-known researchers are under increased scrutiny. Recently, journalists and researchers have reviewed the Stanford Prison Experiment that is closely associated with the name of Philip Zimbardo.…

-

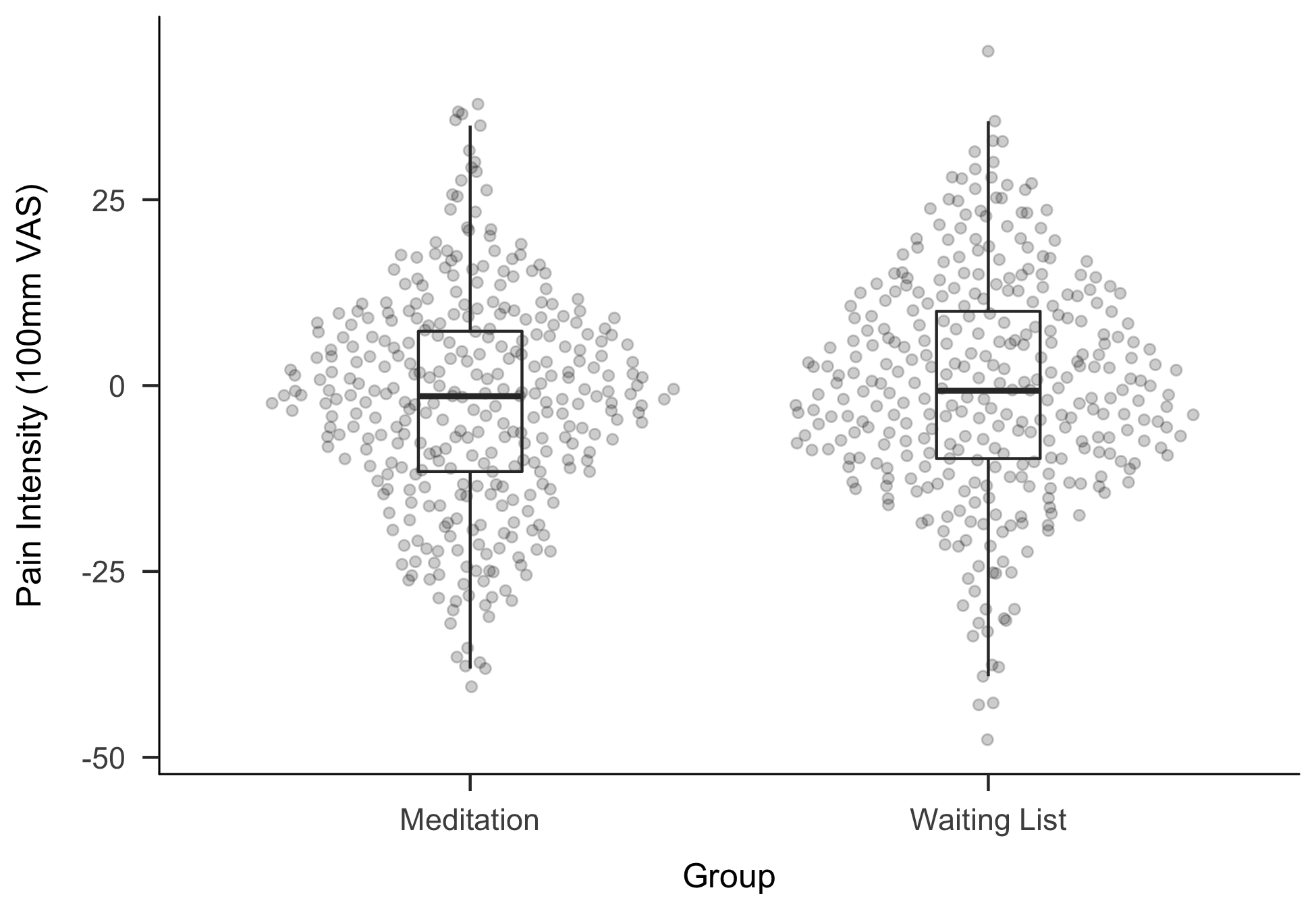

New Preprint: Making “Null Effects” Informative

In February and March this year, I stayed at Eindhoven University of Technology in the amazing group of Daniël Lakens, Anne Scheel and Peder Isager, who are actively researching questions of replicability in psychological science. Over the two months I learned a lot, exchanged some great ideas with the three of them – and…

-

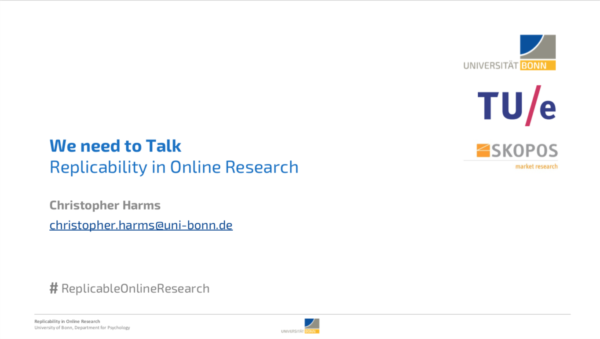

Replicability in Online Research

At the GOR conference in Cologne two weeks ago, I had the opportunity to give a talk on replicability in Online Research. As a PhD student researching this topic and working as a data scientist in market research, I was very happy to have the opportunity to give my thoughts on how the debate in…

-

p-hacking destroys everything (not only p-values)

In the context of problems with replicability in psychology and other empirical fields, statistical significance testing and p-values have received a lot of criticism. And without question: much of the criticism has its merits. There certainly are problems with how significance tests are used and p-values are interpreted. However, when we are talking about “p-hacking”,…

-

Thoughts on the Universality of Psychological Effects

Most discussed and published findings from psychological research claim universality in some way. Especially for cognitive psychology it is the underlying assumption that all human brains work similarly — an assumption not unfounded at all. But also findings from other fields of psychology such as social psychology claim generality across time and place. It is…

-

Critiquing Psychiatric Diagnosis

I came across this great post at the Mind Hacks blog by Vaughan Bell, which is about how we talk about psychiatric diseases, their diagnosis and criticising their nature. Debating the validity of diagnoses is a good thing. In fact, it’s essential we do it. Lots of DSM diagnoses, as I’ve argued before, poorly predict…

-

How statistics lost their power – and why we should fear what comes next

This is an interesting article from The Guardian on “post-truth” politics, where statistics and “experts” are frowned upon by some groups. William Davies shows how statistics in the political debate have evolved from the 17th century until today, where statistics are not regarded as an objective approach to reality anymore but as an arrogant and…

-

Michael Inzlicht on losing faith in science

Michael Inzlicht has posted an article on his blog about how he lost faith in psychological science after reading the now infamous paper on “false-positive psychology”. It is interesting for me to note that my experience is somewhat different.

-

Replicability, Data Quality and Bayesian Methods

On the About page I wrote that I blog about things I come across while researching for my PhD. So you may very well ask what this PhD is supposed to be about. For the interested reader, researchers and the uninitiated alike, here is an overview of my current plans and research focus.