Category: Science

-

What AI tells us about human psychology

The tech world won’t stop telling us how AI will revolutionize labor, displace workers, and surpass human intelligence — either today or in the near future. In short: AI is amazing, and we should be grateful that Silicon Valley has blessed us with this high-end technology we’ll all learn to love. All hail our AI…

-

How to do stepwise regression in R?

You don’t.

-

Leaving Academia: Goodbye, cruel world!

In September, my contract as a research assistant at the University of Bonn ended. I was lucky to have a 50% contract for three years and even luckier that I had the option to extend the contract for another year. Nevertheless, I will leave academia as I’m close to finishing my PhD thesis and…

-

Why “Prestige” is Better Than Your h-Index

Psychological science is one of the fields that is undergoing drastic changes in how we think about research, conduct studies and evaluate previous findings. Most notably, many studies from well-known researchers are under increased scrutiny. Recently, journalists and researchers have reviewed the Stanford Prison Experiment, which is closely associated with the name of Philip Zimbardo.…

-

Submission Criteria for Psychological Science

Daniël told me about this the other day: Our recent pre-print on informative ‘null effects’ is now cited in the submission criteria for Psychological Science in a paragraph on drawing inferences from ‘theoretically significant’ results that may not be ‘statistically significant’. I feel very honoured that the editorial board at PS considers our manuscript as…

-

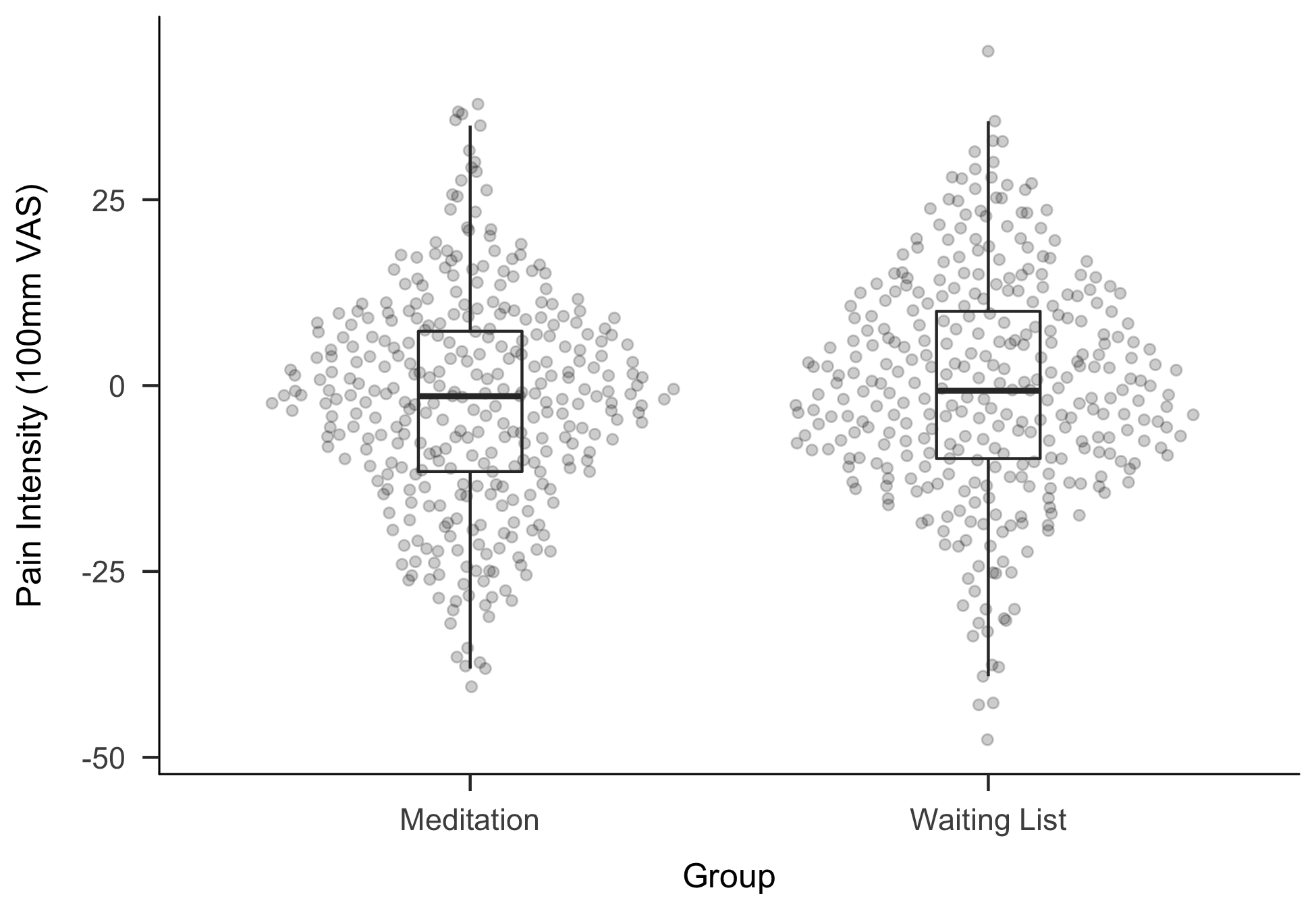

New Preprint: Making “Null Effects” Informative

In February and March this year, I stayed at the Eindhoven Technical University in the amazing group with Daniël Lakens, Anne Scheel and Peder Isager, who are actively researching questions of replicability in psychological science. Over the two months I learned a lot, exchanged some great ideas with the three of them – and…

-

Update on the Replication Bayes Factor

In December I already blogged about the ReplicationBF package I made available on GitHub. It allows you to calculate Replication Bayes Factors for t- and F-tests. The preprint detailing the formulas for the latter was outdated and the method in the package was not optimal, so I recently updated both.

-

Using Topic Modelling to learn from Open Questions in Surveys

Another presentation I gave at the General Online Research (GOR) conference in March was on our first approach to using topic modelling at SKOPOS: How can we extract valuable information from survey responses to open-ended questions automatically? Unsupervised learning is a very interesting approach to this question, but very hard to do right.

-

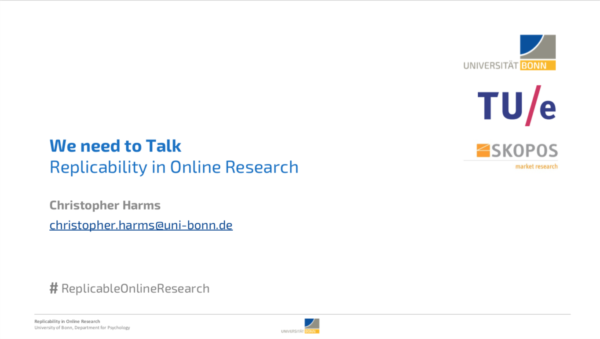

Replicability in Online Research

At the GOR conference in Cologne two weeks ago, I had the opportunity to give a talk on replicability in Online Research. As a PhD student researching this topic and working as a data scientist in market research, I was very happy to have the opportunity to give my thoughts on how the debate in…

-

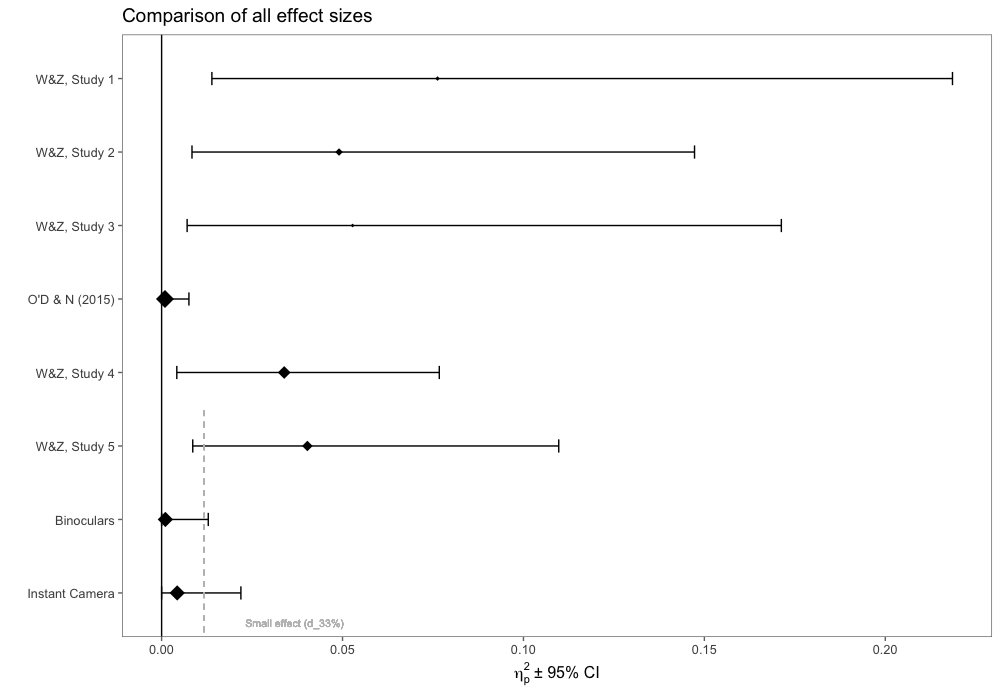

New Preprint: Does it Actually Feel Right?

In a recent post, I mentioned a replication study we performed. We have now finalised the manuscript and uploaded it as a pre-print to PsyArXiv. Update (25.04.2018): The paper is now published at Royal Society Open Science and available here.