Category: Science

-

How statistics lost their power – and why we should fear what comes next

This is an interesting article from The Guardian on “post-truth” politics, in which statistics and “experts” are viewed with suspicion by some groups. William Davies traces how the role of statistics in political debate has evolved from the 17th century to today, when statistics are no longer regarded as an objective approach to reality but as an arrogant and…

-

Predictions for Presidential Elections Weren’t That Bad

Nate Silver’s FiveThirtyEight has provided excellent coverage of the US presidential election, with some great analytical pieces and very interesting insights into their models. Each and every poll predicted that Hillary Clinton would win the election, and FiveThirtyEight was no exception. Consequently, there was a lot of discussion about pollsters, their methods and…

-

New Paper: Reliability Estimates for Three Factor Score Estimators

Just a short post on a new paper that is available from our department. If you have calculated factor scores after a factor analysis, e.g. using Thurstone’s regression estimator, you might be interested in the reliability of the resulting scores. Our paper explains how to do this, compares the reliability of three different factor…
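The paper’s own reliability estimates are not reproduced here. As a loosely related illustration, the sketch below computes a classic quantity for Thurstone’s regression estimator in a one-factor model: the squared correlation between the factor and its regression score, which equals λ′Σ⁻¹λ. It assumes a standardized factor, diagonal uniquenesses and hypothetical loadings; the function name is made up for this example, and no claim is made that this coincides with the reliability definition used in the paper.

```python
import numpy as np


def regression_score_determinacy(loadings, uniquenesses):
    """Squared correlation between a standardized factor and its Thurstone
    regression score estimate, rho^2 = lambda' Sigma^{-1} lambda.

    Assumes a one-factor model with factor variance 1 and diagonal
    uniquenesses Psi, so the implied covariance is Sigma = lambda lambda' + Psi.
    """
    lam = np.asarray(loadings, dtype=float).reshape(-1, 1)
    psi = np.diag(np.asarray(uniquenesses, dtype=float))
    sigma = lam @ lam.T + psi
    # Regression (Thurstone) score weights: w = Sigma^{-1} lambda
    weights = np.linalg.solve(sigma, lam)
    # Cov(f, w'x) = Var(w'x) = lambda' Sigma^{-1} lambda, hence corr^2 equals it
    return float((lam.T @ weights)[0, 0])


# Hypothetical standardized loadings; uniquenesses chosen so item variances are 1
loadings = [0.8, 0.7, 0.6]
uniq = [1 - l ** 2 for l in loadings]
print(regression_score_determinacy(loadings, uniq))
```

For a single factor the result simplifies to s / (1 + s) with s = Σ λᵢ² / ψᵢ, so adding items or raising loadings pushes the value towards 1.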

-

Confidence Intervals for Noncentrality Parameters

In recent years, it has become common practice to report not only point estimates of effect sizes but also confidence intervals for them. I have written a small R script that calculates the bounds of such a confidence interval for the noncentrality parameter of the t and F distributions.
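The R script itself is not shown here. As a rough Python sketch of the usual approach (test inversion), assuming SciPy’s `stats.nct` and `optimize.brentq` rather than anything from the original script: the lower bound is the noncentrality parameter under which the observed t lies at the 97.5th percentile, and the upper bound the one under which it lies at the 2.5th percentile. The function name `nct_ci` and the ±10 search bracket are assumptions for illustration.

```python
from scipy import optimize, stats


def nct_ci(t_obs, df, conf=0.95):
    """Confidence interval for the noncentrality parameter of a t distribution.

    Finds ncp_lo with P(T <= t_obs | ncp_lo) = 1 - alpha/2 and
    ncp_hi with P(T <= t_obs | ncp_hi) = alpha/2.
    """
    alpha = 1 - conf

    def cdf_minus(ncp, target):
        # Decreasing in ncp for fixed t_obs, so each target has a single root
        return stats.nct.cdf(t_obs, df, ncp) - target

    # Assumed bracket: wide enough for moderate |t_obs| and df
    a, b = t_obs - 10, t_obs + 10
    lower = optimize.brentq(cdf_minus, a, b, args=(1 - alpha / 2,))
    upper = optimize.brentq(cdf_minus, a, b, args=(alpha / 2,))
    return lower, upper


# Hypothetical example: t = 2.5 with 20 degrees of freedom
print(nct_ci(2.5, 20))
```

The F case follows the same pattern with `scipy.stats.ncf`, except that the noncentrality cannot be negative, so a bound is conventionally set to 0 when no root exists above 0.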

-

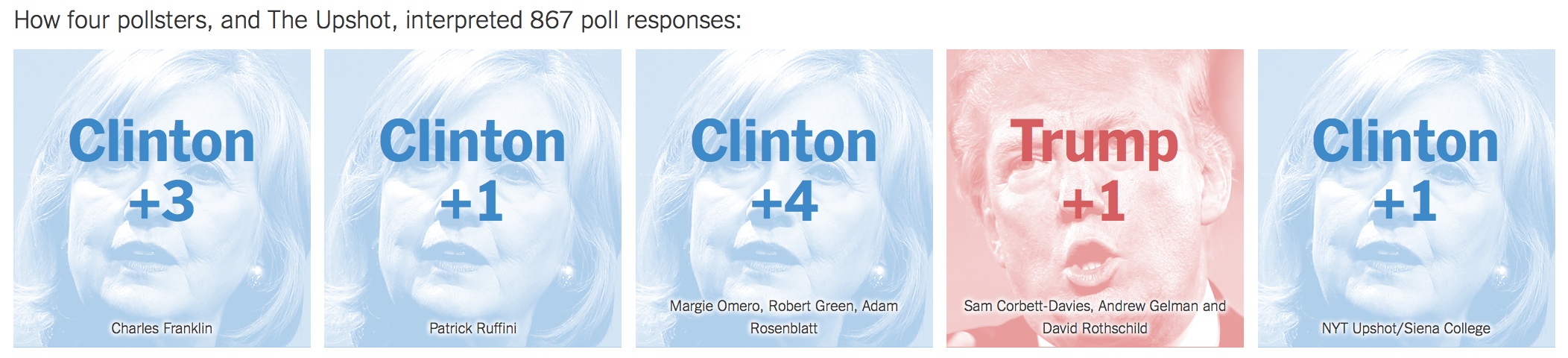

Differences in Pollster Predictions

The New York Times published an interesting piece on the differences between pollsters’ predictions. All five predictions used the same data set, so sampling differences play no role. Still, the predictions differed by up to 5 percentage points.
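One way identical raw data can yield different numbers is through weighting choices, such as assumptions about who will turn out. The sketch below is a toy post-stratification example with entirely hypothetical counts and turnout shares (not the NYT data): the same sample, weighted to two different assumed electorates, gives a 2-point difference for candidate “X”. The function and variable names are made up for illustration.

```python
# Hypothetical toy sample: (age group, candidate) -> number of respondents
sample = {
    ("young", "X"): 60, ("young", "Y"): 40,
    ("old", "X"): 80, ("old", "Y"): 120,
}


def poststratified_share(sample, targets, candidate):
    """Weight each group to its assumed population share, then sum the
    weighted within-group support for `candidate`."""
    estimate = 0.0
    for group, pop_share in targets.items():
        n_group = sum(n for (g, _), n in sample.items() if g == group)
        n_cand = sample.get((group, candidate), 0)
        estimate += pop_share * n_cand / n_group
    return estimate


# Two hypothetical assumptions about the electorate's age composition
low_young = {"young": 0.3, "old": 0.7}
high_young = {"young": 0.4, "old": 0.6}
for name, targets in [("low youth turnout", low_young), ("high youth turnout", high_young)]:
    print(name, round(100 * poststratified_share(sample, targets, "X"), 1))
```

Real pollsters combine many such choices (turnout models, education or party weights), which is how differences of several points can add up.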

-

Michael Inzlicht on losing faith in science

Michael Inzlicht has posted an article on his blog about how he lost faith in psychological science after reading the now infamous paper on “false-positive psychology”. Interestingly, my own experience has been somewhat different.

-

Fraud in Medical Research

Back in September of last year, Der Spiegel published an interview with Peter Wilmshurst, a British physician and whistleblower who exposed fraudulent practices in medical research. It is a very interesting article and well worth reading.

-

Euro Cup predictions through Stan

After I calculated the probabilities of Germany dropping out of the World Cup two years ago, I had always wanted to do some Bayesian modeling for the Bundesliga or the Euro Cup, which started yesterday. Unfortunately, I never got around to it. But today Andrew Gelman posted a model by Leonardo Egidi on his blog: Leonardo Egidi…
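Egidi’s Stan model is not reproduced here. As a much simpler, non-Bayesian building block, football models often start from the assumption that each team’s goals follow independent Poisson distributions; the sketch below turns two hypothetical scoring rates into win/draw/loss probabilities. A Bayesian model would instead place priors on such rates and estimate them from past results. The function names and rates are illustrative assumptions.

```python
from math import exp, factorial


def poisson_pmf(k, lam):
    """Probability of exactly k goals under a Poisson(lam) distribution."""
    return lam ** k * exp(-lam) / factorial(k)


def match_probabilities(lam_home, lam_away, max_goals=10):
    """Win/draw/loss probabilities for the first team, assuming independent
    Poisson goal counts truncated at max_goals (the neglected tail is tiny
    for typical football scoring rates)."""
    home = [poisson_pmf(k, lam_home) for k in range(max_goals + 1)]
    away = [poisson_pmf(k, lam_away) for k in range(max_goals + 1)]
    win = draw = loss = 0.0
    for i, p_i in enumerate(home):
        for j, p_j in enumerate(away):
            if i > j:
                win += p_i * p_j
            elif i == j:
                draw += p_i * p_j
            else:
                loss += p_i * p_j
    return win, draw, loss


# Hypothetical expected goals for two teams
print(match_probabilities(1.6, 1.1))
```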

-

Choosing Cut-Offs in Tests

My last blog post was on the difference between sensitivity, specificity and the positive predictive value. While it showed that a positive test result can correspond to a low probability of actually having a trait or a disease, that example treated the values of sensitivity and specificity as known inputs. For established tests and measures they indeed…
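The post’s own method for choosing a cut-off is truncated above, so the sketch below shows just one common criterion, Youden’s J (sensitivity + specificity − 1), applied to hypothetical test scores. It assumes higher scores indicate the trait and that a score at or above the cut-off counts as positive; other rules weight false positives and false negatives by their costs or by prevalence.

```python
def sens_spec(cutoff, sick_scores, healthy_scores):
    """Sensitivity and specificity when scores >= cutoff count as positive."""
    sens = sum(s >= cutoff for s in sick_scores) / len(sick_scores)
    spec = sum(s < cutoff for s in healthy_scores) / len(healthy_scores)
    return sens, spec


def youden_cutoff(sick_scores, healthy_scores):
    """Observed score that maximizes Youden's J = sens + spec - 1."""
    candidates = sorted(set(sick_scores) | set(healthy_scores))

    def youden_j(c):
        sens, spec = sens_spec(c, sick_scores, healthy_scores)
        return sens + spec - 1

    return max(candidates, key=youden_j)


# Hypothetical scores for people with and without the condition
sick = [5, 7, 8, 9, 10]
healthy = [2, 3, 4, 5, 6, 8]
best = youden_cutoff(sick, healthy)
print(best, sens_spec(best, sick, healthy))
```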

-

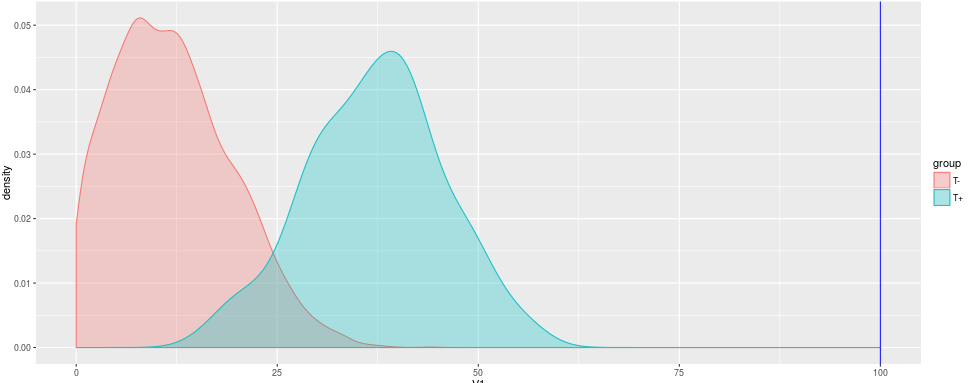

Visualizing Sensitivity and Specificity of a Test

In my university course on Psychological Assessment, I recently explained the different quality criteria of a test used for dichotomous decisions (yes/no, positive/negative, healthy/sick, …). A popular textbook example is cancer screening, where an untrained reader might be surprised by the low predictive value of a test. I created a…
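The surprisingly low predictive value follows from Bayes’ theorem: PPV = sens · prev / (sens · prev + (1 − spec) · (1 − prev)). The sketch below uses hypothetical screening values (not taken from the post) to show that even a test with 90% sensitivity and 91% specificity yields a PPV of roughly 9% when only 1% of those screened have the disease.

```python
def positive_predictive_value(sensitivity, specificity, prevalence):
    """P(disease | positive test) via Bayes' theorem."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


# Hypothetical screening numbers
ppv = positive_predictive_value(sensitivity=0.9, specificity=0.91, prevalence=0.01)
print(f"PPV = {ppv:.3f}")  # PPV = 0.092
```

Most positives here are false positives because the 9% false-positive rate applies to the 99% who are healthy.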